
Data Quality Rules

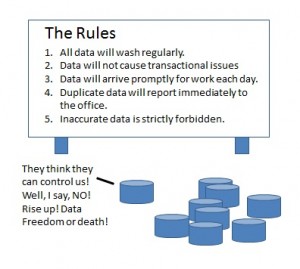

What’s the difference between good data and bad data? It is much like the difference between good children and bad children: the bad data doesn’t follow the rules.

But what are the rules? Unlike the rules for kids, which have been set in stone for decades (or at least, parents wish it were so), the rules for data are slippery things that depend very much on the context and the database.

While it’s a complex subject, some basic rules of thumb can help you avoid the deeper rabbit holes.

The first thing to understand about data quality rules is that they aren’t as easy as they may look. In theory, data lives in the ordered world of computers; in reality, it lives in the “flexible” world of humans. A huge amount of data is entered by members of the group “Homo sapiens” (or mutilated by software written by members of that group), and as a result it is not as ordered as we would all like.

The challenge for data quality practitioners is to remove the chaos injected by those highly involved primates (us) and make the data the sterile, ordered, never-any-question-about-anything kind that we all imagine in our fantasies.

But how?

In the end, it is amazing how powerful and complex the various solutions to this problem are.

But I suggest that there are some basic principles that can help guide us.

First, do no harm.

One of the risks of any data quality initiative is that it actually screws up the data more. Don’t define rules so complex, and so sure of themselves, that they make the data worse. Be humble. Don’t change data unless you are pretty sure it’s a good idea. Err on the side of not screwing up the original. And keep a copy of the original, so that if things do go off the rails you can undo the changes, or at least try to understand what went wrong.
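A minimal sketch of the “keep a copy of the original” advice, assuming records are plain Python dictionaries and that `fixes` is a small, hand-reviewed mapping of corrections you are confident about. The function names and the mapping are hypothetical, not part of Datamartist. Only exact matches are changed, everything else is left as entered, and a change log makes the cleanup reversible.

```python
from typing import Dict, List, Tuple

Record = Dict[str, str]
Change = Tuple[int, str, str]  # (row index, old value, new value)


def apply_confident_fixes(
    records: List[Record], column: str, fixes: Dict[str, str]
) -> Tuple[List[Record], List[Change]]:
    """Return cleaned copies of `records` plus a log of every change made.

    Only values that exactly match a key in `fixes` are changed; anything
    else is left exactly as entered. The input list is never modified.
    """
    cleaned: List[Record] = []
    change_log: List[Change] = []
    for i, rec in enumerate(records):
        new_rec = dict(rec)  # work on a copy so the original record survives
        old = rec.get(column)
        if old in fixes and fixes[old] != old:
            new_rec[column] = fixes[old]
            change_log.append((i, old, fixes[old]))
        cleaned.append(new_rec)
    return cleaned, change_log


def undo(cleaned: List[Record], change_log: List[Change], column: str) -> List[Record]:
    """Rebuild the pre-cleanup values from the change log."""
    restored = [dict(rec) for rec in cleaned]
    for i, old, _new in change_log:
        restored[i][column] = old
    return restored


originals = [{"country": "Canda"}, {"country": "Canada"}, {"country": "Cnd?"}]
cleaned, log = apply_confident_fixes(originals, "country", {"Canda": "Canada"})
print(cleaned)  # "Cnd?" is left alone: we are not sure what it was meant to be
print(log)  # → [(0, 'Canda', 'Canada')]
```

The design choice is to prefer leaving a doubtful value untouched over guessing: the unrecognized entry stays as typed and can be reviewed by a person later.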

Go out and talk to the people

Don’t sit in your ivory tower and speculate as to what the data means. Go out there and watch people enter it. See what real-world things are happening that never make it into the bits and bytes.

Attack the basics first

Focus your first efforts on the basics; they will resolve the vast majority of the issues. Don’t chase after the outliers until you have the “easy” cases taken care of, because the tough stuff is a case of diminishing returns. Look first at how to fix processes and train your people so that the majority of typical data entry cases are more accurate, before you start looking into artificial-intelligence-based hyper-multi-semantic-algorithmic-learning-matching-holistic-flux-capacitor data quality systems.
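One hypothetical way to find “the basics” is to count how often each simple rule fails across your records and start with the most frequent failures. The sketch below assumes records are Python dictionaries; the three rules are illustrative assumptions, not a recommended rule set.

```python
from collections import Counter
from typing import Callable, Dict, List

Record = Dict[str, str]

# Illustrative rules only; real rules depend on your data and its context.
RULES: Dict[str, Callable[[Record], bool]] = {
    "email_present": lambda r: bool(r.get("email", "").strip()),
    "email_has_at": lambda r: "@" in r.get("email", ""),
    "postal_code_present": lambda r: bool(r.get("postal_code", "").strip()),
}


def violation_counts(records: List[Record]) -> Counter:
    """Count, for each rule, how many records fail it."""
    counts: Counter = Counter()
    for rec in records:
        for name, rule in RULES.items():
            if not rule(rec):
                counts[name] += 1
    return counts


records = [
    {"email": "a@example.com", "postal_code": "K1A 0B1"},
    {"email": "", "postal_code": "M5V 3L9"},
    {"email": "bob at example", "postal_code": ""},
]
# The most frequent failures are the first candidates for process fixes or training.
print(violation_counts(records).most_common())
```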

Less is more: the fewer rules, the better.

So what’s the rule about making rules? Try to make fewer rules, and test them in a pragmatic way. It is possible to have so many rules that the rules themselves have data quality issues; don’t go there.
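A pragmatic test for a single rule, sketched under the assumption that you can hand-label a small sample of records as known good and known bad: run the rule over both samples and count false alarms (good records it rejects) and misses (bad records it accepts). The `check_rule` helper and the `us_zip_only` rule are hypothetical; the rule is deliberately “too sure of itself”.

```python
from typing import Callable, Dict, List

Record = Dict[str, str]
Rule = Callable[[Record], bool]


def check_rule(rule: Rule, known_good: List[Record], known_bad: List[Record]) -> Dict[str, int]:
    """Score a rule against small, hand-labelled samples."""
    # Good records the rule rejects: the rule itself is wrong here.
    false_alarms = sum(1 for r in known_good if not rule(r))
    # Bad records the rule accepts: the rule is too lenient here.
    misses = sum(1 for r in known_bad if rule(r))
    return {"false_alarms": false_alarms, "misses": misses}


def us_zip_only(r: Record) -> bool:
    """Hypothetical, overconfident rule: every postal code must be 5 digits."""
    code = r.get("postal_code", "")
    return len(code) == 5 and code.isdigit()


known_good = [{"postal_code": "90210"}, {"postal_code": "K1A 0B1"}]  # a US and a Canadian-format code
known_bad = [{"postal_code": ""}, {"postal_code": "9021"}]
print(check_rule(us_zip_only, known_good, known_bad))  # → {'false_alarms': 1, 'misses': 0}
```

A rule with false alarms is itself a data quality problem: here it would flag a perfectly good Canadian postal code as bad data.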

Sometimes the simplest things will bring the greatest benefit.

In the coming weeks, I’ll be posting about how to design, implement, and monitor data quality rules using the Datamartist tool.
