« Business Intelligence Workspaces and in memory self serve analysis | Data modelling Hierarchies- how to make a dimension »

Duplicate Data and removing duplicate records

Duplicate records, doubles, redundant data, duplicate rows; it doesn’t matter what you call them, they are one of the biggest problems in any data analyst’s life.

Duplicate records, doubles, redundant data, duplicate rows; it doesn’t matter what you call them, they are one of the biggest problems in any data analyst’s life.

There are lots of different types of data quality problems, but in this post I’ll focus on Duplicates.

I’ll share some hints on how to find duplicate records and remove duplicate records, at least from your sight, if not from the source system.

Duplicate Records

A lot of the duplicate records that you’re apt to meet belong to two distinct types.

Non-unique Keys

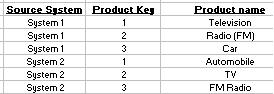

This is where two records in the same table have the same code or key, but may or may not have different values and meanings- this can happen when you’re mixing data, or data is coming from non-database sources like text files, (csv files from a csv import say), or excel files. Databases usually have some sort of unique key so don’t tend to have this problem- but if you merge data from two different databases the uniqueness might be lost- example: say you have an oracle database (System 1) and a mysql database (System 2), both of which use a “unique” integer to track products. When you merge the two, you are going to have two of everything:

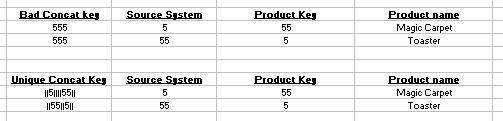

Notice I’ve added a column that specifies the source system- where the record came from- this is the first step in solving this problem- you need to Concatenate or combine the keys- although “Product Key” is not unique by itself, “Source System” + “Product Key” is unique, because each source system is internally unique. Now there is a trick to concatenation- add a string of unusual characters when combining. This ensures that by random luck the two keys don’t combine to be another duplicate key- here’s a different example that illustrates the point:

I like to use one or more of the pipe “|” character because its often not present, or even not allowed in source data and codes. Of course, you need a tool that is willing to accept that character as part of a string key for this to work. If you are doing this in excel, use the “&” to concatenate fields together and add in other characters as needed. The above example used the following syntax in the formula =”||” & A1 & “||||” & B1 & “||”

Its a trick you can use to be able to use VLOOKUP more effectively- another example- say you have a list that has first name, last name, and some address information. First Name + Last Name might not be unique on its own- throw in the street address though, and chances are you can get a more accurate list of unique people from a key point of view. Of course this doesn’t solve the John Smith, J. Smith, Johnny Smith, Johnathan Smith problem, or addresses like 123 Any Street vs 123 Any St. vs 123 Any Avenue (often all the same, with errors in data entry)… which leads us to;

Duplicate Meaning

This is more common, and sometimes harder to deal with. In example above, even though you can fix the duplicate key problem by concatonating a code for the source system to the key (along with some unused characters to ensure no “gotchas”)- its pretty clear that “Television” and “TV” are probably the same thing, and you don’t really want to see two products. These types of duplicates are often the most damaging to good analysis. Everything works, but your reports are difficult to read, or worse, you make decisions based on your “top 20 products” when in fact, 15 of them are not in the top twenty at all, because the REAL best sellers got split between “TV” and “Television” and “TV Screen” etc. Some automated duplicate detection tools exist (particularly in the area of peoples names and addresses), but in the end for many types of data its the old human eyeball that has to do the work- and you need some sort of system to keep a map of all the duplicates you’ve identified.

And obviously, you know by now where all this is going- the tool you need is the tool I’m creating; Datamartist.

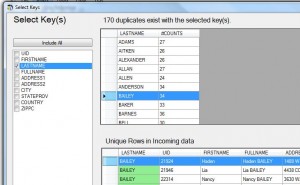

Here are some teaser screen shots of the work in progress, and examples of the functionality that deals with the two problems we’ve discussed above:

To resolve duplicate keys, Datamartist scans the data, and allows you to select keys and experiment- as many as you like (doing the concatenation trick that I described above automatically) and informs you which keys are duplicate, and shows you the duplicates for the various key combinations. If there is no way around it, you can keep a non-unique key- Datamartist will fix the reference by taking the value that is most common within a given data set and mapping attributes from that record, giving you a clean, unique reference set to work with, and eliminating that handfull of bad records that are messing things up.

To resolve duplicate keys, Datamartist scans the data, and allows you to select keys and experiment- as many as you like (doing the concatenation trick that I described above automatically) and informs you which keys are duplicate, and shows you the duplicates for the various key combinations. If there is no way around it, you can keep a non-unique key- Datamartist will fix the reference by taking the value that is most common within a given data set and mapping attributes from that record, giving you a clean, unique reference set to work with, and eliminating that handfull of bad records that are messing things up.

In the case of the second type of duplicates, Datamartist provides a filter/search capability to let you find all the duplicate rows (with “Smith” as the last name, for example). Then it allows you to identify which records are the “Master” and which are to be treated as duplicates. From that point on, the duplicates are mapped to the master, and the reference set shows a single, consistent set of data.

In the case of the second type of duplicates, Datamartist provides a filter/search capability to let you find all the duplicate rows (with “Smith” as the last name, for example). Then it allows you to identify which records are the “Master” and which are to be treated as duplicates. From that point on, the duplicates are mapped to the master, and the reference set shows a single, consistent set of data.

In both these cases, and as a general rule of how Datamartist works, the mapping and configuration you do is not lost if you change input files, or update with new data. As long as the minimum data structure consistency is there, the mapping you did stays with you, so you only have to do it once. You might need to remap some field names, but Datamartist lets you do that easily too, so the same mapping can be used to analyze many different data sets that use the same underlying keys (and would have had the same underlying data quality issues).

Download Datamartist now– see the de-duplication functionality in action.

« Business Intelligence Workspaces and in memory self serve analysis | Data modelling Hierarchies- how to make a dimension »